UEFI firmware from five of the leading suppliers contains vulnerabilities that allow attackers with a toehold in a user's network to infect connected devices with malware that runs at the firmware level.

The vulnerabilities, which collectively have been dubbed PixieFail by the researchers who discovered them, pose a threat mostly to public and private data centers and possibly other enterprise settings. People with even minimal access to such a network—say a paying customer, a low-level employee, or an attacker who has already gained limited entry—can exploit the vulnerabilities to infect connected devices with a malicious UEFI.

Short for Unified Extensible Firmware Interface, UEFI is the low-level and complex chain of firmware responsible for booting up virtually every modern computer. Because malicious firmware installed this way runs before the main OS loads, UEFI infections can’t be detected or removed using standard endpoint protections. They also give attackers unusually broad control of the infected device.

Five vendors, and many a customer, affected

The nine vulnerabilities that make up PixieFail reside in TianoCore EDK II, an open source implementation of the UEFI specification. The implementation is incorporated into offerings from Arm Ltd., Insyde, AMI, Phoenix Technologies, and Microsoft. The flaws reside in functions related to IPv6, the successor to the IPv4 Internet Protocol network address system. They can be exploited in what’s known as PXE, or the Preboot Execution Environment, when it’s configured to use IPv6.

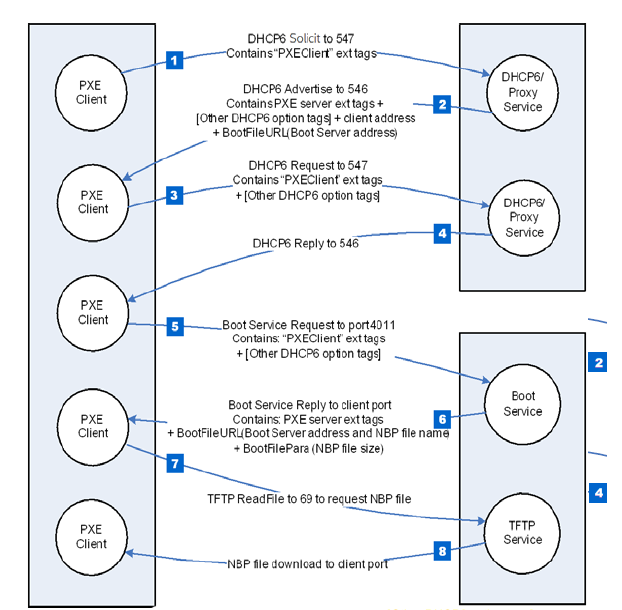

PXE, sometimes colloquially referred to as Pixieboot or netboot, is a mechanism enterprises use to boot up large numbers of devices, which more often than not are servers inside of large data centers. Rather than the OS being stored on the device booting up, PXE stores the image on a central server, known as a boot server. Devices booting up locate the boot server using the Dynamic Host Configuration Protocol and then send a request for the OS image.
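The two steps described above, locating the boot server and then requesting the image, can be modeled as a toy simulation. This is a minimal sketch and not real PXE or DHCP code: the `BootInfo` structure, the `pxe_boot` function, the addresses, and the file name are all hypothetical, and plain dictionaries stand in for the network.

```python
from dataclasses import dataclass


@dataclass
class BootInfo:
    """What a DHCP reply tells a PXE client (hypothetical structure)."""
    boot_server: str  # address of the boot server
    boot_file: str    # name of the boot image to request


def pxe_boot(mac: str,
             dhcp_leases: dict[str, BootInfo],
             boot_servers: dict[str, dict[str, bytes]]) -> bytes:
    """Simulate the two PXE steps: locate the boot server, then fetch the image."""
    # Step 1: the DHCP exchange tells the client where the boot server is.
    info = dhcp_leases.get(mac)
    if info is None:
        raise LookupError(f"no DHCP boot information for {mac}")
    # Step 2: the client requests the OS image from that boot server.
    images = boot_servers[info.boot_server]
    return images[info.boot_file]


# Toy data: one client and one boot server holding one image.
leases = {"aa:bb:cc:dd:ee:ff": BootInfo("2001:db8::10", "os-image.efi")}
servers = {"2001:db8::10": {"os-image.efi": b"fake-os-image"}}
image = pxe_boot("aa:bb:cc:dd:ee:ff", leases, servers)
```

The point of the sketch is that the client acts on whatever the network tells it before any OS is running, which is why flaws in this pre-boot network code matter.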

PXE is heavily used for large desktop deployment environments as well. System Center and other bulk deployment platforms use it to boot off the LAN to dump a machine with a new image. Anyone running such an environment is probably at risk as well.

Indeed. AMI and Phoenix provide quite a lot of desktop PC EFI firmware, and they're on the vulnerable list, so that's going to be a giant headache to patch, especially when you have a variety of hardware from different waves of purchasing.

On the other hand, in that sort of environment the users already have physical access to the PCs, which opens up so many other methods of attack; this is just one more on the pile of what an in-house staff member could do if they were so inclined and had the capability. At least those networks tend to be accessible only by someone on the premises, so even with a network-level attack (as opposed to a physical-access one) it's not like random people outside the org have access, unlike a cloud hosting service. Nor is PXE in use every time a PC boots: the OS is local, so PXE is only used for reimaging. Nor, indeed, are we using IPv6 internally for PC networks, which is where this PXE vulnerability is.

(IPv4 is ample for a network our size, and our ISP still does not support IPv6 - we have a test network with a 6-in-4 gateway so we're ready for when it's eventually needed)

We also only have our PXE deployment on a small subsection of VLANs, not the whole org, and certainly not on the general-access VLANs such as those used by BYOD laptops, so hopefully the vector for an infected personal device doing something automated is limited, but still, sigh.

Basically we've got large venues full of PCs that are re-imaged every three to six months. By default the PC does a network boot check, driven by DHCP variables, that uses PXE to check with System Center if there is a deployment advertisement for that machine. If yes, then dump the advertised task sequence; if not, exit PXE and boot the first disk. Our servers have network boot disabled; we do know that risk. ;)
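To make the boot decision described above concrete, here is a minimal sketch of the logic. It is not System Center's actual API: `choose_boot_action`, the `advertisements` mapping, and the machine and task names are all made up for illustration.

```python
from typing import Optional


def choose_boot_action(machine_id: str,
                       advertisements: dict[str, str]) -> tuple[str, Optional[str]]:
    """Decide what a PC does after its network boot check.

    `advertisements` maps a machine ID to the task sequence advertised for it
    (a hypothetical stand-in for the deployment server's lookup).
    """
    task_sequence = advertisements.get(machine_id)
    if task_sequence is not None:
        # A deployment is advertised for this machine: re-image it.
        return ("run_task_sequence", task_sequence)
    # Nothing advertised: leave PXE and boot the local disk as usual.
    return ("boot_first_disk", None)


# Toy data: only one PC in the venue is due for re-imaging.
ads = {"venue-pc-017": "deploy-image-v2"}
reimage = choose_boot_action("venue-pc-017", ads)
normal = choose_boot_action("venue-pc-018", ads)
```

Every boot goes through the network check first, so every boot exposes the machine to whatever answers on that network segment, even when the result is just "boot the first disk".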

Budget constraints = no NAC/NAP solution, therefore no regular deployment VLAN because of static VLANs on the ports. Yes, we should be doing "press F12 to select boot device" and defaulting to hard disk, but we would probably get pushback from the interns, who would then have to run around to 200 machines and manually intervene.

This probably needs to change soon. I'm thinking of something in the task sequence that can switch the UEFI boot order to put the first disk/Windows Boot Manager first, plus the ability to change that remotely when re-imaging is necessary.

Suggestions would be appreciated. This evolved out of System Center's recommended process from several years ago.